Oml

A Stats and ML library in OCaml

Leonid Rozenberg,

Compose Conference,

Slides are available here

http://rleonid.github.io/slides/oml/compose2016/index.html

Who am I?

- Software/ML dude at the Hammer Lab within the

Icahn Institute for Genomics and Multiscale Biology

.

Working at the intersection of biology, computers and math.

.

Working at the intersection of biology, computers and math.- Strong advocates of open source.

- Background in finance.

- I'm a type addict

Array.fold_left... leads to thinking.:set +tin my~/.ghci

What am I selling?

- Get involved (try it out, file an issue, share opinions ...)

- There are lots of interesting problems in this domain.

- It is important to get this right.

Getting started.

Install:

$ opam install oml

Try it out in utop:

# #require "oml";; # #show_module Oml ;; module Oml : sig module Util : sig end module Uncategorized : sig end module Statistics : sig end module Online : sig end module Classification : sig end module Regression : sig end module Unsupervised : sig end end

A quick tour part 1.

Util a Pervasive's like module:

module Util : sig type iterative_failure_reason = | Out_of_bounds of float | No_convergence | Too_many_iterations of int | Too_few_iterations of int exception Iteration_failure of string * iterative_failure_reason val invalidArg : ('a, unit, string, 'b) format4 -> 'a val pi : float val midpoint : float -> float -> float module Array : sig end val dx : float val significantly_different_from : ?d:float -> float -> float -> bool val equal_floats : d:float -> float -> float -> bool val is_nan : float -> bool val is_degenerate : float -> bool type 'a bound = Open of 'a | Closed of 'a val within : 'a bound * 'a bound -> 'a -> bool module Float : sig end module type Optional_arg_intf = sig type opt val default : opt end val fst3 : 'a * 'b * 'c -> 'a val snd3 : 'a * 'b * 'c -> 'b val thr3 : 'a * 'b * 'c -> 'c end val Array.sumf : float array -> float

A quick tour part 2.

Uncategorized: a work in progress

# show_module Uncategorized ;; module Uncategorized : sig module Continued_fraction : sig end module Functions : sig end module Lacaml_util : sig end module Svd : sig end module Estimations : sig end module Solvers : sig end module Vectors : sig end module Matrices : sig end end # open Uncategorized ;; # Functions.regularized_lower_gamma ;; - : a:float -> float -> float = <fun> # Solvers.newton_raphson_full ;; - : ?init:float -> accuracy:float -> iterations:int -> lower:float -> upper:float -> ~f:(float -> float) -> ~df:(float -> float) -> float = <fun> # #show_module Svd ;; module Svd : sig type t val u : t -> float array array val s : t -> float array val vt : t -> float array array val svd : ?copy:bool -> Lacaml.D.mat -> t val solve_linear : ?lambda:float -> t -> Lacaml.D.vec -> Lacaml.D.vec val covariance_matrix_inv : ?lambda:float -> t -> Lacaml.D.mat val looe : t -> Lacaml.D.vec -> float -> Lacaml.D.vec val h : t -> Lacaml.D.mat val h_diag : t -> Lacaml.D.vec end

A quick tour part 3.

Online: Stable mean and standard deviation for folding over data.

# #show_module Online ;; module Online : sig type t = { size : int; last : float; max : float; min : float; sum : float; sum_sq : float; mean : float; var : float; } val empty : t val init : ?size:int -> float -> t type update = { n_mean : float; n_var : float; } module type Update_rules = sig val apply : size:float -> n_sum:float -> n_sum_sq:float -> n_size:float -> t -> float -> update end module Make : functor (Update : Update_rules) -> sig end val update : ?size:int -> t -> float -> t val join : t -> t -> t end

Unsupervisied : Start of unsupervised methods.

# #show_module Unsupervised.Pca ;; module Pca : sig type t val variances : t -> float array val components : t -> float array array val scalings : t -> (float * float) array val pca : ?demean:bool -> ?scale:bool -> ?unbiased:bool -> Lacaml.D.mat -> t type pca_reduction_method = Num_comp of int | Varience_exp of float val reduce : t -> pca_reduction_method -> t end

A quick tour part 4.

Statistics When you need to get dirty with data

# #show_module Statistics ;; module Statistics : sig module Descriptive : sig end module Distributions : sig end module Hypothesis_test : sig end module Measures : sig end module Sampling : sig end end # # Descriptive.kurtosis ;; - : float array -> float = <fun> # # Distributions.poisson_cdf ;; - : mean:float -> float -> float = <fun> # # Hypothesis_test.mean_t_test ;; - : float -> Hypothesis_test.null_hypothesis -> float array -> Hypothesis_test.t = <fun> # # Sampling.normal ;; - : ?seed:int array -> mean:float -> std:float -> unit -> float Sampling.generator = <fun>

A quick tour part 5 (not so quick aye?)

Regression Fitting linear model

# #show_module Regression ;; module Regression : sig module Intf : sig end (* Interfaces constructed by modules below *) module Univariate : sig end module SolvedLPViaSvd : sig end module Eval_multivariate : sig end module Multivariate : sig end module Tikhonov : sig end end

Classification : learn probabilistic associations between features and classes

# #show_module Classification ;; module Classification : sig module Probabilities : sig end module Intf : sig end (* Interfaces constructed by NB and LR *) module Naive_bayes : sig end module Logistic_regression : sig end module Performance : sig end end # #show_module Classification.Logistic_regression ;; module Logistic_regression : sig module Binary : functor (D : Intf.Continuous_encoded_data) -> sig end module Multiclass : functor (D : Intf.Continuous_encoded_data) -> sig end end # #show_module Classification.Naive_bayes ;; module Naive_bayes : sig module Binomial : functor (D : Intf.Dummy_encoded_data) -> sig end module Categorical : functor (D : Intf.Category_encoded_data) -> sig end module Gaussian : functor (D : Intf.Continuous_encoded_data) -> sig end end

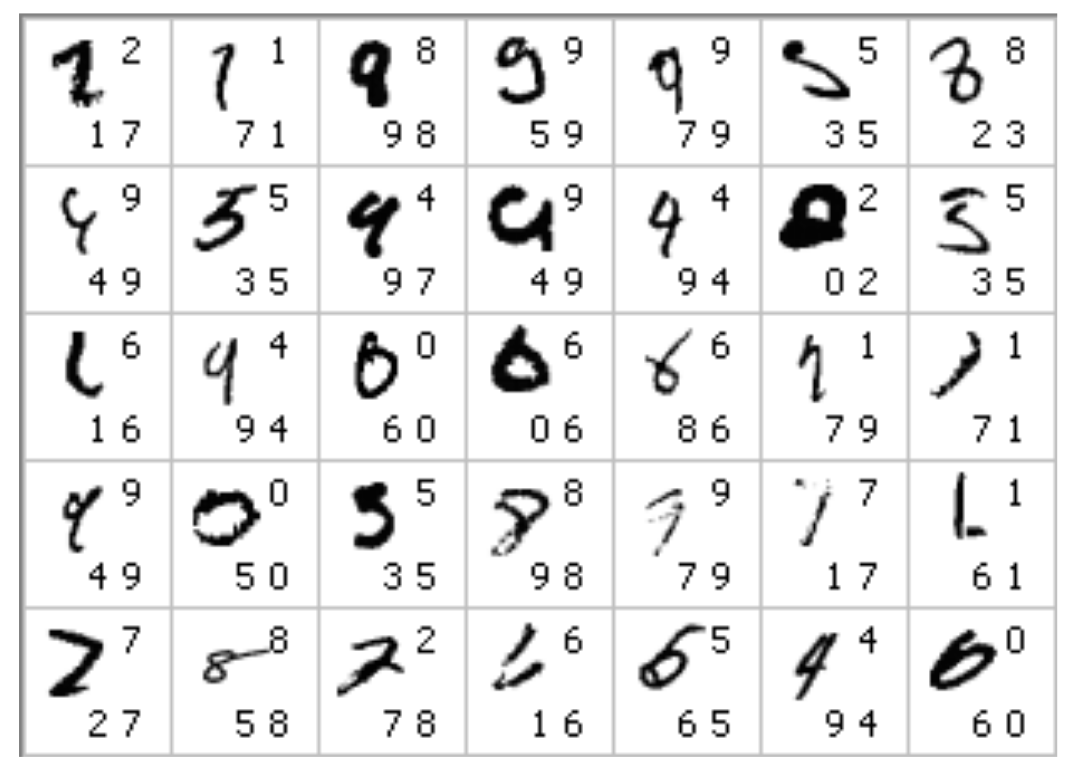

Demo time: MNIST

MNIST : http://yann.lecun.com/exdb/mnist/ Famous data set of handwritten digits collected by NIST and Modified by Yann LeCun, Corinna Cortes and Christopher J.C. Burges.

Demo time: MNIST, approach

- Let's use Multiclass Logistic Regression.

- just a minimization of a cost function:

- Not that kind of conference!

- A result of a

functor# open Classification;; # #show_module_type Intf.Continuous_encoded_data ;; module type Continuous_encoded_data = sig type clas type feature val encoding : feature -> float array val size : int end # #show_module Logistic_regression.Multiclass ;; module Multiclass : functor (D : Intf.Continuous_encoded_data) -> sig type clas = D.clas type feature = D.feature type opt val default : opt type t val eval : t -> feature -> clas Probabilities.t type samples = (clas * feature) list val estimate : ?opt:opt -> ?classes:clas list -> samples -> t val opt : ?lambda:float -> ?tolerance:float -> unit -> opt val coefficients : t -> float array array val class_order : t -> clas list end

Demo time: MNIST. load the data

#require "dsfo" ;; Mnist.download `Train ;; Mnist.download `Test ;; let m_train = Mnist.data `Train ;; let m_test = Mnist.data `Test ;; val m_train : (float, Bigarrayo.float64_elt, Bigarrayo.fortran_layout) Bigarrayo.A2.t = C1 C2 C3 C59998 C59999 C60000 R1 0 0 0 ... 0 0 0 R2 0 0 0 ... 0 0 0 R3 0 0 0 ... 0 0 0 ... ... ... ... ... ... ... R792 0 0 0 ... 0 0 0 R793 0 0 0 ... 0 0 1 R794 0 0 0 ... 0 0 0 val m_test : (float, Bigarrayo.float64_elt, Bigarrayo.fortran_layout) Bigarrayo.A2.t = C1 C2 C3 C9998 C9999 C10000 R1 0 0 0 ... 0 0 0 R2 0 0 0 ... 0 0 0 R3 0 0 0 ... 0 0 0 ... ... ... ... ... ... ... R792 1 0 0 ... 0 0 0 R793 0 0 0 ... 0 0 0 R794 0 0 0 ... 0 0 0

Demo time: MNIST, visualize

# showme 12312 ;; - : (int, Bigarrayo.int8_unsigned_elt, Bigarrayo.fortran_layout) Bigarrayo.GA.t * int = ( C1 C2 C3 C4 C5 C6 C7 C8 C9 C10 C11 C12 C13 C14 C15 C16 C17 C18 C19 C20 C21 C22 C23 C24 C25 C26 C27 C28 R1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R3 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R4 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R5 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R6 0 0 0 0 0 0 0 0 0 0 0 0 0 0 108 235 241 241 241 120 0 0 0 0 0 0 0 0 R7 0 0 0 0 0 0 0 0 0 0 15 109 228 228 255 253 253 253 253 248 121 0 0 0 0 0 0 0 R8 0 0 0 0 0 0 0 0 22 81 179 253 253 253 254 253 253 253 253 253 213 0 0 0 0 0 0 0 R9 0 0 0 0 0 0 0 22 187 253 253 253 253 218 187 186 186 243 253 253 213 0 0 0 0 0 0 0 R10 0 0 0 0 0 0 0 179 253 253 253 247 192 31 0 0 0 116 253 253 213 0 0 0 0 0 0 0 R11 0 0 0 0 0 0 0 214 253 253 253 226 0 0 0 0 0 172 253 253 213 0 0 0 0 0 0 0 R12 0 0 0 0 0 0 0 79 128 198 93 83 0 0 0 0 10 218 253 253 213 0 0 0 0 0 0 0 R13 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 102 253 253 253 135 0 0 0 0 0 0 0 R14 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 228 253 253 225 38 0 0 0 0 0 0 0 R15 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 242 254 254 254 137 0 0 0 0 0 0 0 0 R16 0 0 0 0 0 0 0 0 0 0 0 0 0 0 171 253 253 253 253 216 108 108 45 0 0 0 0 0 R17 0 0 0 0 0 0 0 0 0 0 0 10 94 164 254 253 253 253 253 253 253 253 231 45 0 0 0 0 R18 0 0 0 0 0 0 0 0 22 81 172 218 253 253 254 253 253 253 253 253 253 253 253 162 0 0 0 0 R19 0 0 0 0 0 0 61 110 215 253 253 253 253 253 254 253 253 253 253 253 253 253 253 218 0 0 0 0 R20 0 0 0 0 0 108 247 253 253 253 253 253 253 253 229 143 66 66 66 66 66 66 66 31 0 0 0 0 R21 0 0 0 0 20 243 253 253 253 253 253 253 251 142 42 0 0 0 0 0 0 0 0 0 0 0 0 0 R22 0 0 0 0 206 253 253 253 253 253 235 212 88 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R23 0 0 0 0 170 240 240 240 240 140 34 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R24 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R25 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R26 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R27 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 R28 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0, 2)

Demo time: MNIST, encode the data:

let feature_size = 28 * 28 module MnistEncoded = struct type clas = int type feature = float array let encoding arr = arr let size = feature_size end ;;

Demo time: MNIST, plumbing

let column_to_label c = let rec loop i = if c.{i + feature_size} = 1.0 then i else loop (i + 1) in loop 1 let to_samples data = Array.init (Mat.dim2 data) (fun i -> let col = Mat.col data (i + 1) in let ftr = copy ~n:MnistEncoded.size col |> Vec.to_array in let lab = column_to_label col in lab, ftr) |> Array.to_list let s_train = to_samples m_train let s_test = to_samples m_test let tolerance = if not (!Sys.interactive) && Array.length Sys.argv = 2 then float_of_string Sys.argv.(1) else 1e7

Demo time: MNIST, apply the functor and train

# module MnistLr = Logistic_regression.Multiclass(MnistEncoded) ;; module MnistLr : sig type clas = MnistEncoded.clas type feature = MnistEncoded.feature type opt = Logistic_regression.Multiclass(MnistEncoded).opt val default : opt type t = Logistic_regression.Multiclass(MnistEncoded).t val eval : t -> feature -> clas Probabilities.t type samples = (clas * feature) list val estimate : ?opt:opt -> ?classes:clas list -> samples -> t val opt : ?lambda:float -> ?tolerance:float -> unit -> opt val coefficients : t -> float array array val class_order : t -> clas list end # let mnist_lr = MnistLr.(estimate ~opt:(opt ~tolerance ()) s_train)

Demo time: MNIST, evaluate on test data

# let perf_test = s_test |> List.map (fun (c, f) -> c, P.most_likely (MnistLr.eval mnist_lr f)) ;; val perf_test : (int * MnistLr.clas) list = [(8, 8); (3, 3); (2, 2); (1, 1); (5, 5); (2, 2); (5, 5); (10, 10); ...] # let correct_test = List.fold_left (fun c (a,p) -> if a = p then c + 1 else c) 0 perf_test;; val correct_test : int = 9263 # let total_test = List.length perf_test;; val total_test : int = 10000 # Printf.printf "test %0.3f correct\n" ((float correct_test) /. (float total_test));; test 0.926 correct

Three Lessons for OCaml: 1.

local open & overloading = cheap DSL

let circle_area r = Float.(pi * r * r) let norm x = sqrt Vector.(x**`T * x)

Three Lessons for OCaml: 2.

Separate optional arguments into a separate type:

module Capability : sig type optional_args val opt : ?foo:float -> ?bar:[`Whatever | `Something ] -> unit -> optional_args val default : optional_args (* let default = opt () *) val work : ?o:optional_args -> [`Other | `Required | `Args ] -> int end

Three Lessons for OCaml: 3 ~ kind of.

- Cheating heuristic: to write fast OCaml, write "C"-Caml.

- Pretend that you're writing

C(unroll).

let sum1 arr = Kahan.sum (Array.fold_left Kahan.update Kahan.empty a) let sum2 arr = let c = ref 0. in let s = ref 0. in for i = 0 to Array.length a - 1 do let x = a.(i) -. !c in let ns = !s +. x in c := (ns -. !s) -. x; s := ns; done; !s

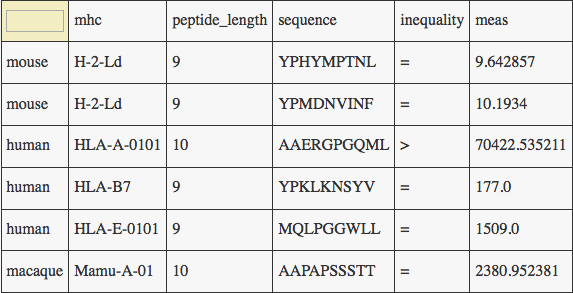

Three Reasons to use types in ML: Problem Domain

type amino_acid = | A | C | D | E | F | G | H | I | K | L | M | N | P | Q | R | S | T | V | W | Y type bs = | Binder | NonBinder type ord = Eq | Lt | Gt type transforms = | NoOp | Log (* natural logarithm *) | Nielson of float (* max 0 (1.0 - log b / log cap *) | NielCon of float

Three Reasons to use types in ML: Specificity

We can be precise

Yes, a statistician knows how to use

Randscipybut would a non-expert know? For example: Suppress the intercept in regression in R:

model <- fit(y ~ 0 + x1 + x2, data = bar) model <- glm(y ~ x1 + x2 - 1, data = bar)

or Oml

# Rm.(regress ~opt:(opt ~add_constant_column:false ())) ;; Rm.input array -> resp:float array -> Rm.t = <fun>

Three Reasons to use types in ML: Typeful transformations

- regression, classification (... other methods) aren't objects:

- Functors. Typeful transformations.

- Step 1.

val linear_regress : float array -> float array -> floatval classifier : (clas * feature) list -> (feature -> clas Probabilities.t)- Really step 0.1

- Step 2. Dependent types?

- Normally distributed errors.

- Data bounded to specific ranges.

Three Reasons to use types in ML: 4a.

Types can solve "META" problems: ex. Training vs Validation vs Testing

(* type of data *) type training type validating type testing (* type of models *) type trained type validated module type Machine_learning_pipeline = sig type 'a t type 'a data val train : training data -> trained t val validate : validating data -> trained t -> validated t val test : testing data -> validated t -> float end

Testing via properties

- Our functions are {float} -> {float}

- Float are not reals

- Violate almost every property of the field of Reals

- Maybe not additive inverses, commutative?

- At the moment the best that we have

- Very large domains and codomains, what do we do?

- Construct probabilistic tests that fail!

- small percent (1%) of the time

- Based on |Boundary| * |Precision| * |Size|

Testing observations

- If probabilistic, failure is important!

- How do you test two methods that sum an array of floats?

let kahan_test arr = let copy = false in let nsum = Array.fold_left (+.) 0. in let vals = Array.init permutations (fun _ -> let sum_me = permute ~copy arr in nsum sum_me, sumf sum_me) in let naive_sums, kahan_sums = Array.unzip vals in let make_set = Array.fold_left (fun s e -> Fs.add e s) Fs.empty in let uniq_naive = make_set naive_sums |> Fs.cardinal in let uniq_kahan = make_set kahan_sums |> Fs.cardinal in uniq_kahan <= uniq_naive)

Testing observations: WIP

- Difficult to structure.

- Different dependencies:

- Util < Statistics < Regression

- Util < Regression < Statistics

- Difficult to convey to user.

Thanks and questions.

- Hammer Lab - www.hammerlab.org

- Jeff Hammerbacher

- Sebastien Mondet

- Isaac Hodes

- Carmelo Piccione

- Shoulders of giants:

- Markus Mottl,

Lacaml - Christophe Troestler,

L-BFGS

- Markus Mottl,

Presentation Credit

Origin of Oml (Ocaml Math Library)

- First things first; the name!

- Hardest problem in computer science

- Started amassing methods over my career.

- Using

SVDfor regression - Kept rewriting Naive Bayes

- Can't remember the rules for performing inference tests.

- Using

- I'm a type addict

Array.fold_left... leads to thinking.:set +tin my~/.ghci

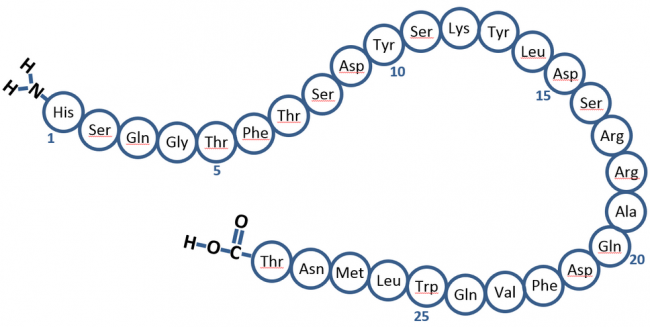

Origin of Oml

Take an amino acid sequence

Origin of Oml:

Make a prediction

Meta

- Compiled on

Thu, 04 Feb 2016 00:31:24 -0500 - Git:

3d176c6-dirty